Video games have long been a vital source of entertainment for people everywhere. They are accessible, affordable, need not be time consuming and, most importantly, are very fun. As of 2024 there are around 3.3 billion gamers in the world. Whether it is competitive Fortnite or Subway Surfers, video games are a global phenomenon. One of the things that entices gamers today is the graphics of their favourite games.

Despite the enormous number of people enjoying video games, surprisingly few know how exactly one is transported to fantasy lands, thrilling battlefields and hundreds of other environments.

Table of Contents

- Evolution of Graphics

- Graphics rendering pipeline

- Overview

- Rendering Pipeline Stages

1) Vertex Specification

2) Vertex Shader

3) Tessellation

4) Vertex post processing

5) Primitive Assembly

6) Rasterization

7) Fragment Shader

8) Per-Sample Operations

- Ray Tracing

- Hardware of Game Graphics

- Future of Game Graphics

Evolution of Graphics

Back when video games first dawned, after the breakthrough of the famous game Pong, characters were made of blocks, and these pixelated creations were the Kratoses, Spider-Men and Lara Crofts of their day. Colours were as simple as a child’s box of crayons.

However, game graphics have gone through revolution after revolution, and this has allowed us to travel to magical lands and meet unimaginable characters.

8 bit graphics- This method was used in the early 1980s and involved arranging pixels in different ways to create characters and worlds. Games like Space Invaders and Donkey Kong were made using 8 bit graphics.

16 bit graphics- An improved version of 8 bit graphics. They were more detailed and offered a wider range of colours. They had better resolution and enhanced sprite animation capabilities that facilitated smoother character movements and more complex in-game actions. They also used parallax scrolling to give an illusion of depth. Super Mario World and Sonic the Hedgehog were made using 16 bit graphics.

3D Graphics- 3D video game graphics use three-dimensional rendering techniques to create in-game environments and characters. Because objects and environments are represented in three dimensions, the result is a more immersive and realistic visual experience. Some games with 3D graphics are God of War: Chains of Olympus and Call of Duty: Black Ops.

HD Graphics- These graphics brought in an era of detailed, immersive gaming. They are still used today, on consoles from the Xbox 360 to the PS5. HD video game graphics resolution started from 720p (1280×720 pixels) and extended to 1080p (1920×1080 pixels) and beyond. Many HD games aim for higher frame rates, often 30 frames per second (fps) or more, providing smoother and more realistic motion during gameplay.

Far Cry and Doom 3 were built using HD graphics.

Ultra HD- These graphics have a much higher resolution and pixel count than HD graphics. The term “4K” refers to a display resolution of approximately 4,000 pixels across the horizontal axis. The standard Ultra HD resolution is 3840 x 2160 pixels, which is slightly under 4,000 pixels wide but is colloquially referred to as 4K. The transition from standard definition (SD) to high definition (HD), 4K and beyond was driven by the desire for sharper and clearer images.
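To put these resolutions side by side, the short Python sketch below multiplies out the pixel counts quoted above. The names `RESOLUTIONS` and `pixel_count` are illustrative only and are not taken from any library.

```python
# Pixel counts for the resolutions mentioned above.
RESOLUTIONS = {
    "720p (HD)": (1280, 720),
    "1080p (Full HD)": (1920, 1080),
    "4K (Ultra HD)": (3840, 2160),
}


def pixel_count(width, height):
    """Total number of pixels in one frame."""
    return width * height


for name, (w, h) in RESOLUTIONS.items():
    print(f"{name}: {w} x {h} = {pixel_count(w, h):,} pixels")

# How many times more pixels a 4K frame has than a 1080p frame.
ratio = pixel_count(3840, 2160) / pixel_count(1920, 1080)
print(f"4K / 1080p = {ratio:.0f}x")
```

Every one of those pixels has to be produced again for each frame, which is why higher resolutions demand so much more from the hardware.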

Graphics rendering pipeline

A) Overview

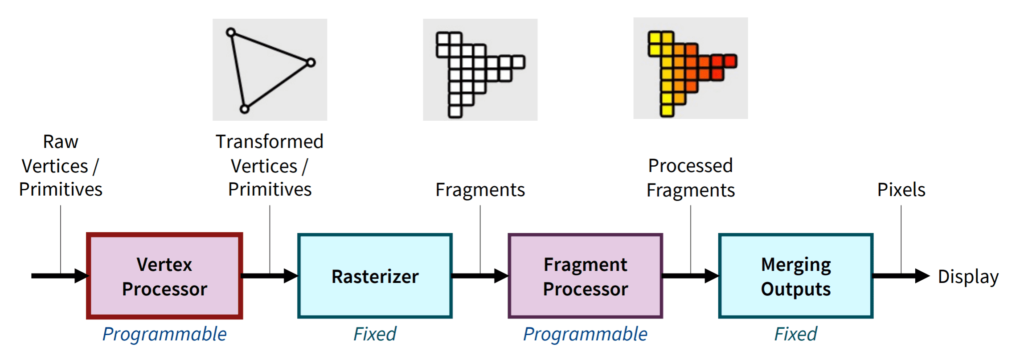

The Graphics Pipeline is the process by which we convert a description of a 3D scene into a 2D image that can be viewed. This involves both transformations between spaces and conversions from one type of data to another. Graphics application programming interfaces (APIs) such as Direct3D, OpenGL and Vulkan were developed to standardize common procedures and give programs a uniform way to drive the graphics pipeline of a given hardware accelerator.

In modern video games, everything you see is made up of meshes, which are essentially collections of geometric shapes, and the simplest and most common of these shapes are triangles. Whether it’s the character models, landscapes, or intricate details like tree leaves or bricks on a wall, they’re all broken down into thousands or even millions of these small triangles. This geometric approach allows for high precision and flexibility, ensuring smooth, detailed, and realistic graphics.

The magic happens in a series of well-defined stages that make up the graphics pipeline. From the moment the game engine receives data about an object, to the time it’s displayed as a beautifully rendered scene, several complex operations work together to bring that digital world to life. The entire process requires enormous computational power to calculate how each triangle fits into the overall scene, how light interacts with it, and how textures map onto it — all in real-time, often at incredibly fast frame rates.

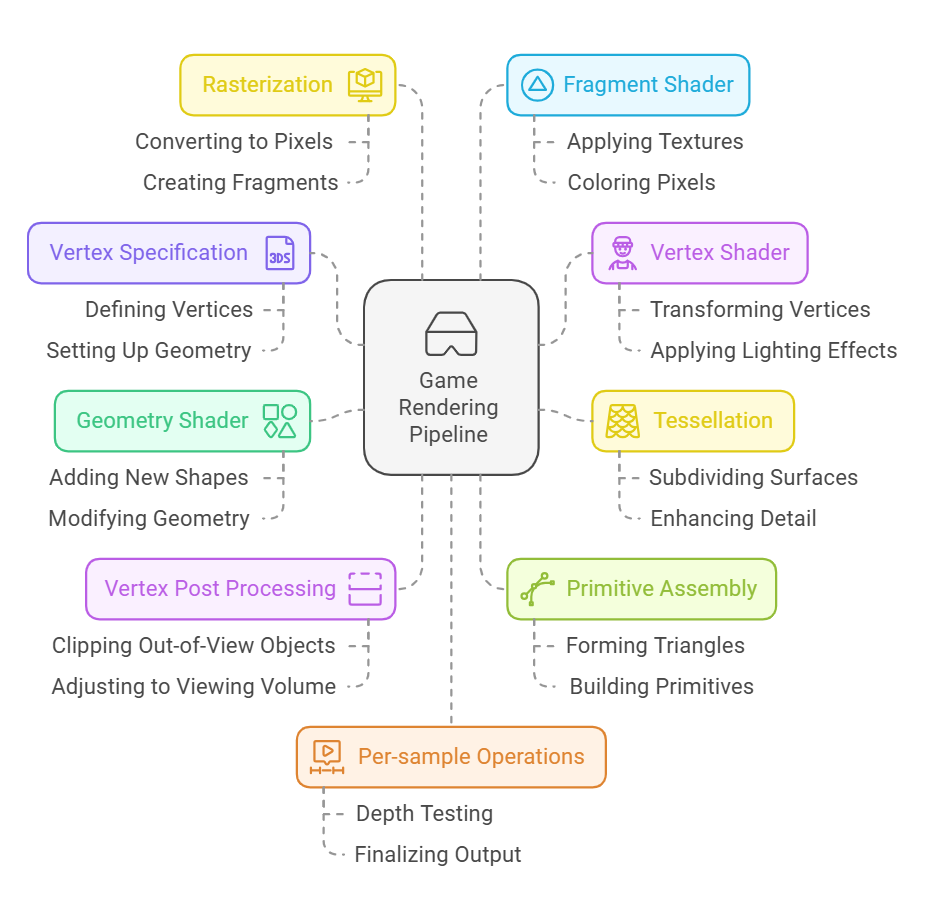

B) Stages of the rendering pipeline

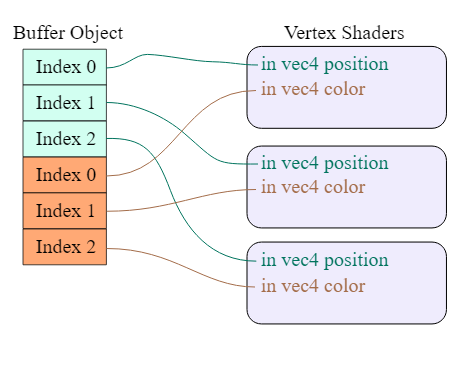

B.1) Vertex Specification

This is the very first step in the pipeline. It defines the positions and attributes of the corners (the vertices) of the geometric shapes that form the building blocks of any 3D game model.

Every object is represented as a set of vertices.

Attributes of vertices are:

- Position- 3D coordinates in (X,Y,Z) terms

- Colour- May be influenced by an artificial light source or texture.

- Texture coordinates- How a texture should be applied to the object.

- Normals- Vectors that indicate the direction each vertex faces, which helps in lighting calculations.

Vertices are organized into simple shapes like triangles that define the geometry of objects. For example, a model of a human might be represented by thousands or even millions of triangles, each defined by its 3 vertices.

A vertex buffer stores information about the vertices and their attributes. The data is passed to the next stage, the vertex shader.
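As a rough illustration of the attributes listed above, here is a minimal Python sketch of a vertex buffer for a flat square. The `Vertex` class and the `index_buffer` list are assumptions made for this example (an index buffer is a common companion to a vertex buffer, though it is not described above). Real engines store this data in packed GPU memory rather than Python objects.

```python
from dataclasses import dataclass


@dataclass
class Vertex:
    position: tuple   # (x, y, z) in model space
    colour: tuple     # (r, g, b), each between 0.0 and 1.0
    uv: tuple         # (u, v) texture coordinates
    normal: tuple     # (nx, ny, nz) direction the surface faces


# A "vertex buffer": one flat list holding every vertex of the model.
# Here the model is a unit square lying in the z = 0 plane.
vertex_buffer = [
    Vertex((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0), (0.0, 0.0, 1.0)),
    Vertex((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 0.0), (0.0, 0.0, 1.0)),
    Vertex((1.0, 1.0, 0.0), (0.0, 0.0, 1.0), (1.0, 1.0), (0.0, 0.0, 1.0)),
    Vertex((0.0, 1.0, 0.0), (1.0, 1.0, 1.0), (0.0, 1.0), (0.0, 0.0, 1.0)),
]

# Hypothetical index buffer: every three indices name one triangle, so the
# two triangles of the square share vertices 0 and 2 instead of copying them.
index_buffer = [0, 1, 2, 0, 2, 3]

triangles = [
    tuple(vertex_buffer[i] for i in index_buffer[t:t + 3])
    for t in range(0, len(index_buffer), 3)
]
print(len(vertex_buffer), "vertices,", len(triangles), "triangles")
```

Sharing vertices through indices is one reason a model with millions of triangles does not need three times as many stored vertices.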

B.2) Vertex Shader

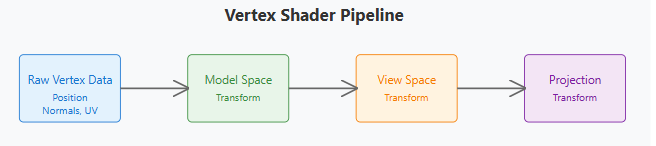

In this stage the raw vertex data is transformed and manipulated to prepare it for final rendering. The vertex shader is written as a custom program in a shader language such as GLSL or HLSL.

The goal is to calculate the final position of each vertex in 3D space based on the camera view, lighting and transformations. It basically takes data and adjusts their location based on how the camera views the world.

- Transformation and Projection

Transforms the data from model space to world space. The world space vertices are then transformed into the view space of the camera. Finally, a projection (either perspective or orthographic) prepares the 3D positions for mapping onto the 2D screen.

- The vertex shader also calculates light information based on the vertex normal.

- After processing, the vertex shader outputs the transformed vertex data, which includes the final position of the vertex along with its attributes.
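The chain of transformations described above can be sketched in plain Python. This is only an illustration: a real vertex shader would be written in GLSL or HLSL and run on the GPU. The helper names (`mat_mul`, `translation`, `perspective`, `vertex_shader`) are made up for this example, and the projection matrix follows the OpenGL convention of a camera looking down the negative z axis, which is an assumption rather than something stated above.

```python
import math


def mat_mul(a, b):
    """Multiply two 4x4 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def transform(m, v):
    """Apply a 4x4 matrix to a 4-component column vector."""
    return [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]


def translation(tx, ty, tz):
    return [[1, 0, 0, tx],
            [0, 1, 0, ty],
            [0, 0, 1, tz],
            [0, 0, 0, 1]]


def perspective(fov_y_deg, aspect, near, far):
    """OpenGL-style perspective matrix (camera looks down the -z axis)."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    return [[f / aspect, 0, 0, 0],
            [0, f, 0, 0],
            [0, 0, (far + near) / (near - far), 2 * far * near / (near - far)],
            [0, 0, -1, 0]]


def vertex_shader(position, model, view, projection):
    """Model space -> world space -> view space -> clip space."""
    mvp = mat_mul(projection, mat_mul(view, model))
    x, y, z = position
    return transform(mvp, [x, y, z, 1.0])


model = translation(0.0, 0.0, -5.0)   # move the object 5 units away along -z
view = translation(0.0, 0.0, 0.0)     # camera at the origin (identity matrix)
projection = perspective(90.0, 16 / 9, 0.1, 100.0)

clip = vertex_shader((1.0, 1.0, 0.0), model, view, projection)
ndc = [c / clip[3] for c in clip[:3]]  # the divide by w happens after the shader
print("clip space:", clip)
print("normalized device coordinates:", ndc)
```

The fourth component, w, carries the distance from the camera. Dividing by it later is what makes distant objects appear smaller.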

B.3) Tessellation

Tessellation is a powerful yet optional stage in the pipeline that adds more detail to 3D models, making them smoother and more complex. This process divides larger geometric shapes into smaller triangles, creating more polygons for a smoother surface.

How it works

- Tessellation control shader (TCS)- It is responsible for defining how much tessellation should be applied. It sets the tessellation factors that control the level of detail, and it can change them dynamically based on distance from the camera.

- Tessellation Primitive Generator (TPG)- It is responsible for the actual subdivision. The TPG divides the polygon into smaller polygons based on the tessellation factors from the TCS. It is effective in creating curved surfaces.

- Tessellation evaluation shader (TES)- It computes the final positions of the vertices of the newly created smaller polygons. The TES ensures that the vertices of the sub-polygons align correctly with the original shape. It can also calculate normals.

Once tessellation is done, the refined vertices are sent down the pipeline.
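The idea of subdividing more when the camera is close can be sketched as follows. This is a simplified stand-in, not how GPU tessellation hardware works: it splits each triangle into four at the edge midpoints, and the distance rule in `tessellation_level` is a hypothetical choice made for this example. A real evaluation shader would also move the new vertices, for instance onto a curved surface, which this sketch leaves out.

```python
def midpoint(a, b):
    return tuple((a[i] + b[i]) / 2.0 for i in range(3))


def subdivide(triangle):
    """Split one triangle into four by connecting its edge midpoints."""
    a, b, c = triangle
    ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
    return [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]


def tessellation_level(distance, max_level=4):
    """Hypothetical rule: one level fewer for every 10 units of distance."""
    return max(0, max_level - int(distance // 10))


def tessellate(triangle, distance):
    """Subdivide repeatedly; nearby triangles get more levels of detail."""
    triangles = [triangle]
    for _ in range(tessellation_level(distance)):
        triangles = [small for t in triangles for small in subdivide(t)]
    return triangles


patch = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
for distance in (5.0, 25.0, 100.0):
    print(f"distance {distance}: {len(tessellate(patch, distance))} triangles")
```

Each level multiplies the triangle count by four, so reducing the level for far-away geometry saves a great deal of work.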

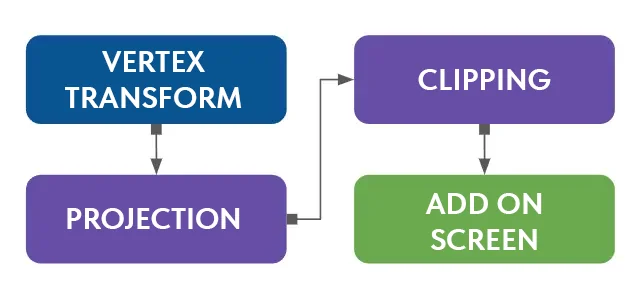

B.4) Vertex post processing

Vertex post processing is a fixed-function stage, so developers have limited to no control over its execution. It nevertheless plays a crucial role in preparing the geometry for the next stages of the pipeline. The most important part of Vertex post processing is Clipping, which discards geometry that is outside the defined viewing volume, ensuring that only the relevant parts of a scene are processed further.

Clipping is the process of removing parts of primitives that lie outside the viewing volume- the space that defines what the camera can see. This helps optimize the rendering process by ensuring that only visible geometry is processed further.

Viewing volume is a 3D region that defines the bounds of the vision of the camera. If a part of the triangle lies outside this it will be clipped off. The remaining portion is passed on to the next stage.

Example:

If a triangle is partially outside the viewing volume, the part that is inside is kept, and the parts outside the volume are discarded. In cases where only part of a primitive is outside, the clipping stage might also divide the primitive into smaller pieces that are still within the viewable area.
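The clipping example above can be sketched with the Sutherland-Hodgman polygon clipping algorithm, used here as a well-known illustration rather than as a description of what any particular GPU does. To keep it short, the sketch works in 2D and clips against a single boundary (x <= 1). Real hardware clips in 3D against all six sides of the viewing volume. All function names are made up for this example.

```python
def clip_polygon(polygon, inside, intersect):
    """Clip a polygon (list of points) against a single boundary."""
    output = []
    for i, current in enumerate(polygon):
        previous = polygon[i - 1]          # wraps around to the last point
        if inside(current):
            if not inside(previous):
                output.append(intersect(previous, current))  # entering
            output.append(current)
        elif inside(previous):
            output.append(intersect(previous, current))      # leaving
    return output


def clip_to_right_edge(polygon, x_max=1.0):
    """Keep only the part of the polygon with x <= x_max."""
    def inside(p):
        return p[0] <= x_max

    def intersect(p, q):
        t = (x_max - p[0]) / (q[0] - p[0])
        return (x_max, p[1] + t * (q[1] - p[1]))

    return clip_polygon(polygon, inside, intersect)


def fan_triangulate(polygon):
    """Split a convex polygon back into triangles."""
    return [(polygon[0], polygon[i], polygon[i + 1])
            for i in range(1, len(polygon) - 1)]


triangle = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0)]
clipped = clip_to_right_edge(triangle)
print("clipped polygon:", clipped)
print("triangles after clipping:", fan_triangulate(clipped))
```

The triangle loses one corner and becomes a four-sided shape, which is then split into two smaller triangles that lie entirely inside the boundary.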

Other tasks

- Viewport transformation- Adjusts the geometry to fit the final screen.

- Perspective Correction- Ensures that the geometry is correctly transformed to account for the perspective of the camera.
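These two tasks can be sketched in a few lines of Python. The perspective step is shown as the usual divide by the w component. The viewport step assumes a screen whose origin is at the top-left corner with y pointing down. That convention differs between graphics APIs, so treat it as an assumption of this example, along with the function names.

```python
def perspective_divide(clip):
    """Clip space (x, y, z, w) -> normalized device coordinates in [-1, 1]."""
    x, y, z, w = clip
    return (x / w, y / w, z / w)


def viewport_transform(ndc, width, height):
    """NDC -> pixel coordinates, with (0, 0) at the top-left of the screen."""
    x, y, z = ndc
    screen_x = (x + 1.0) * 0.5 * width
    screen_y = (1.0 - y) * 0.5 * height   # flip y: NDC +y is up, screen +y is down
    depth = (z + 1.0) * 0.5               # remap depth from [-1, 1] to [0, 1]
    return (screen_x, screen_y, depth)


clip_position = (2.0, 1.0, 3.0, 4.0)      # example output of a vertex shader
ndc = perspective_divide(clip_position)
screen = viewport_transform(ndc, 1920, 1080)
print("NDC:", ndc)
print("screen:", screen)
```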

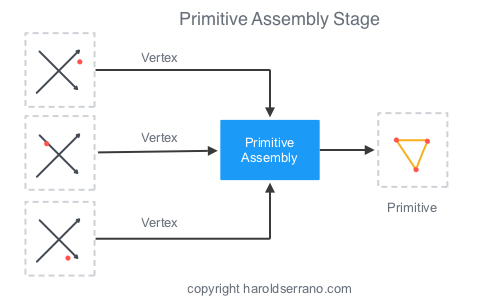

B.5) Primitive Assembly

After Vertex Post Processing the Primitive Assembly stage comes into play. This is a transition where vertex data is grouped into geometric primitives. Its role is to prepare the geometry for Rasterization by organizing vertices into proper primitive shapes.

Vertex Grouping: This stage takes the processed vertex data and groups it into a sequence of primitives. The type of primitive depends on how the geometry is defined (e.g., points, line strips, or triangles).

Handling Connectivity: For primitives like line strips or triangle strips, the stage determines how consecutive vertices connect to form the final shapes.

Ordering: Ensures that the vertices are organized in the correct order for rendering.

Discarding Degenerate Primitives: Any invalid or incomplete primitives (e.g., a triangle with all vertices at the same position) are discarded at this stage.

Primitive Assembly works with the topology of the geometry to determine how the vertices are grouped:

- Point List: Each vertex is treated as a separate point.

- Line List: Each pair of vertices forms a line.

- Triangle List: Every group of three vertices forms a triangle.

- Triangle Strip: A connected strip of triangles, where each new vertex extends the previous triangle.
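The difference between a triangle list and a triangle strip, and the discarding of degenerate triangles, can be sketched as below. The function names are illustrative. The degenerate check only catches repeated vertices, which is the case mentioned above.

```python
def assemble_triangle_list(vertices):
    """Every group of three vertices forms one triangle; leftovers are dropped."""
    return [tuple(vertices[i:i + 3]) for i in range(0, len(vertices) - 2, 3)]


def assemble_triangle_strip(vertices):
    """Each new vertex forms a triangle with the two vertices before it."""
    triangles = []
    for i in range(len(vertices) - 2):
        a, b, c = vertices[i], vertices[i + 1], vertices[i + 2]
        # Swap two vertices on odd triangles so the winding order stays consistent.
        triangles.append((a, b, c) if i % 2 == 0 else (b, a, c))
    return triangles


def is_degenerate(triangle):
    """A triangle with a repeated vertex has zero area and can be discarded."""
    return len(set(triangle)) < 3


# Six vertices; the fourth and fifth are deliberately identical.
vertices = [(0, 0), (1, 0), (0, 1), (1, 1), (1, 1), (2, 1)]

as_list = assemble_triangle_list(vertices)
as_strip = assemble_triangle_strip(vertices)
kept = [t for t in as_strip if not is_degenerate(t)]

print("triangle list: ", len(as_list), "triangles")
print("triangle strip:", len(as_strip), "triangles,", len(kept), "after discarding degenerates")
```

The same six vertices give two triangles as a list but four as a strip, because a strip reuses the previous two vertices for every new triangle.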

B.6) Rasterization

Rasterization is the process of taking a vector graphics image which is made up of shapes and converting it into a raster image which is made up of pixels or dots for output on a video display or printer or for storage in a bitmap file format.

Rasterization is one of the most critical steps in the rendering pipeline, where 3D primitives are converted into fragments, which are basically potential pixels on the 2D screen. This stage bridges the gap between the geometric data of a 3D world and the pixel-based display of a monitor.

- Projection and mapping

The 3D scene is projected onto a 2D screen space using the camera’s perspective. Every vertex of the primitive is mapped from its normalized device coordinates (NDC) to viewport coordinates. The result is a 2D representation of the scene that can fit onto the screen.

- Primitive discretization

The rasterizer determines which pixels are covered by each primitive. Triangles, the most common primitives, are divided into discrete fragments based on their coverage.

- Generating fragments

Fragments are potential pixels. Each fragment has a position, a depth and a set of attributes.

- Interpolation

Rasterization uses interpolation to calculate the value of each attribute at a fragment from the values stored at the vertices of the primitive.
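A minimal software rasterizer shows coverage and interpolation together. It is only a sketch under simplifying assumptions: it tests every pixel centre on a tiny grid with edge functions and blends the three vertex colours with barycentric weights. Real GPUs do this in dedicated hardware with extra rules for pixels that lie exactly on a shared edge, which are omitted here. The function names are made up for this example.

```python
def edge(a, b, p):
    """Signed area test: tells which side of the edge a->b the point p is on."""
    return (p[0] - a[0]) * (b[1] - a[1]) - (p[1] - a[1]) * (b[0] - a[0])


def rasterize(v0, v1, v2, c0, c1, c2, width, height):
    """Return {(x, y): colour} for every pixel centre covered by the triangle."""
    area = edge(v0, v1, v2)
    fragments = {}
    if area == 0:
        return fragments                      # zero-area triangle covers nothing
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)            # sample at the pixel centre
            # Barycentric weights: how close p is to each of the three vertices.
            w0 = edge(v1, v2, p) / area
            w1 = edge(v2, v0, p) / area
            w2 = edge(v0, v1, p) / area
            if w0 >= 0 and w1 >= 0 and w2 >= 0:
                fragments[(x, y)] = tuple(
                    w0 * c0[i] + w1 * c1[i] + w2 * c2[i] for i in range(3))
    return fragments


RED, GREEN, BLUE = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)
fragments = rasterize((0, 0), (8, 0), (0, 8), RED, GREEN, BLUE, 8, 8)

for y in range(8):
    print("".join("#" if (x, y) in fragments else "." for x in range(8)))
print("fragment (0, 0) colour:", fragments[(0, 0)])
```

The fragment nearest the red vertex comes out mostly red, and the colour shifts smoothly toward green and blue across the triangle.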

How the GPU optimizes rasterization

- Parallel processing

The GPU divides the screen into tiles and processes them independently. This allows the rasterization stage to handle multiple primitives at once.

- Hierarchical rasterization

The GPU uses this to quickly discard primitives that do not contribute to the final image, saving time by skipping unnecessary computations.

- Depth testing

This eliminates fragments that are hidden behind other geometry before additional processing.

In VR, rasterization helps keep latency low by rendering 3D scenes in real time. In AR applications, it helps overlay 3D objects on real world environments.

Imagine you’re painting a triangle on graph paper:

- Projection: You draw the triangle in perspective on the graph paper.

- Primitive Discretization: You decide which squares (pixels) the triangle touches.

- Interpolation: For each square, you blend the colors or textures of the triangle’s corners to decide the color of the square.

- Output Fragments: These squares are your final pixels, ready to form the triangle on the paper.

B.7) Fragment Shader

This is the stage where each fragment produced in rasterization is processed to determine its final colour and appearance. This stage is vital for adding richness and realism through shading, texture and lighting.

The process involves:

- Determining the colour based on properties like texture and lighting.

- Calculating transparency.

- Applying effects like shadows and reflections.

How it works

- The fragment shader receives data from the rasterization stage. This includes the interpolated colours, texture coordinates and normals, along with the position and depth of each fragment.

- The shader program, typically written in GLSL or HLSL, runs for each fragment. Its operations include sampling texture data to map onto the fragment, lighting calculations using models like Phong, and colour manipulations.

- The shader outputs the colour to be displayed on the screen, along with depth for visibility testing and any extra outputs needed by advanced features.

Examples of texturing- Applying a brick texture to a wall and mapping a character’s face.

Examples of lighting effects- Directional light from an artificial sun and point light from a lamp.

Special effects- Fine surface detail, transparency as in glass or water, and reflections.

The fragment shader is where much of the sense of realism in a game is created, and where developers balance visual quality against performance.
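The per-fragment work described above can be sketched in Python as a texture lookup followed by Phong lighting. A real fragment shader would be written in a shader language and run on the GPU for every fragment in parallel. The function names, the tiny checkerboard texture, and the `ambient` and `shininess` values are all assumptions chosen for this example.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def reflect(incident, normal):
    """Mirror the incident direction about the normal."""
    d = dot(incident, normal)
    return tuple(i - 2.0 * d * n for i, n in zip(incident, normal))


def sample_texture(texture, uv):
    """Nearest-neighbour lookup in a texture stored as rows of colours."""
    height, width = len(texture), len(texture[0])
    x = min(int(uv[0] * width), width - 1)
    y = min(int(uv[1] * height), height - 1)
    return texture[y][x]


def fragment_shader(base_colour, normal, to_light, to_camera,
                    ambient=0.1, shininess=32.0):
    """Phong lighting: ambient + diffuse + specular, clamped to 1.0."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_camera)
    diffuse = max(dot(n, l), 0.0)
    r = reflect(tuple(-c for c in l), n)       # light direction mirrored about n
    specular = max(dot(r, v), 0.0) ** shininess if diffuse > 0.0 else 0.0
    return tuple(min(1.0, c * (ambient + diffuse) + specular) for c in base_colour)


WHITE, GREY = (1.0, 1.0, 1.0), (0.2, 0.2, 0.2)
checker = [[WHITE, GREY],
           [GREY, WHITE]]

base = sample_texture(checker, (0.75, 0.25))
lit = fragment_shader(base, (0.0, 0.0, 1.0), (0.0, 1.0, 1.0), (0.0, 0.0, 1.0))
highlight = fragment_shader((0.8, 0.2, 0.2), (0.0, 0.0, 1.0),
                            (0.0, 0.0, 1.0), (0.0, 0.0, 1.0))
print("textured and lit:", lit)
print("light shining straight at the camera:", highlight)
```

When the light, surface normal and camera line up, the specular term dominates and the fragment turns white, which is the shiny highlight seen on polished surfaces.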

B.8) Per-Sample Operations

The Per-Sample Operations stage ensures only the necessary fragments contribute to the final image. It applies visibility tests, blending, and anti-aliasing to refine the output.

- Scissor Test: Removes fragments outside a specific rectangular area.

- Depth Test: Keeps only the closest fragments visible to the camera.

- Blending: Combines colors for effects like transparency or glowing.

- Multisampling: Smooths edges with anti-aliasing for better image quality.

The Per-Sample Operations stage concludes the rendering pipeline. After this, the final image is displayed on the screen via the framebuffer. This stage encapsulates all the necessary checks and refinements, ensuring optimal performance and high-quality visuals.
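The scissor test, depth test and blending steps listed above can be sketched as one small function that decides whether a fragment reaches the framebuffer. This is a simplified model: multisampling is left out, and the rule that only fully opaque fragments update the depth buffer is an assumption of this example, not a fixed rule of the pipeline. All names are illustrative.

```python
def scissor_test(x, y, rect):
    """rect = (x_min, y_min, x_max, y_max); the max edges are exclusive."""
    x_min, y_min, x_max, y_max = rect
    return x_min <= x < x_max and y_min <= y < y_max


def blend(src_rgb, src_alpha, dst_rgb):
    """'Source over' alpha blending of a new colour onto an existing one."""
    return tuple(src_alpha * s + (1.0 - src_alpha) * d
                 for s, d in zip(src_rgb, dst_rgb))


def write_fragment(framebuffer, depthbuffer, frag, scissor):
    """Run the tests in order; return True if the fragment was drawn."""
    x, y, depth, rgb, alpha = frag
    if not scissor_test(x, y, scissor):
        return False                       # outside the scissor rectangle
    if depth >= depthbuffer[y][x]:
        return False                       # hidden behind something already drawn
    framebuffer[y][x] = blend(rgb, alpha, framebuffer[y][x])
    if alpha == 1.0:
        depthbuffer[y][x] = depth          # assumption: only opaque fragments write depth
    return True


WIDTH, HEIGHT = 4, 4
framebuffer = [[(0.0, 0.0, 0.0)] * WIDTH for _ in range(HEIGHT)]   # starts black
depthbuffer = [[1.0] * WIDTH for _ in range(HEIGHT)]               # 1.0 = farthest
scissor = (0, 0, WIDTH, HEIGHT)

fragments = [
    (1, 1, 0.5, (1.0, 0.0, 0.0), 1.0),   # opaque red
    (1, 1, 0.8, (0.0, 1.0, 0.0), 1.0),   # opaque green, farther away
    (1, 1, 0.3, (0.0, 0.0, 1.0), 0.5),   # half-transparent blue, in front of the red
    (5, 1, 0.1, (1.0, 1.0, 1.0), 1.0),   # outside the scissor rectangle
]
results = [write_fragment(framebuffer, depthbuffer, f, scissor) for f in fragments]
print(results)
print("pixel (1, 1):", framebuffer[1][1])
```

The green fragment never appears because it is behind the red one, while the blue one mixes with the red underneath it to give purple.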

This concludes the basic working of how video game graphics function and how they are implemented on our screens bringing to life our favourite video games and worlds.

Some additional concepts about video game graphics are explained below.

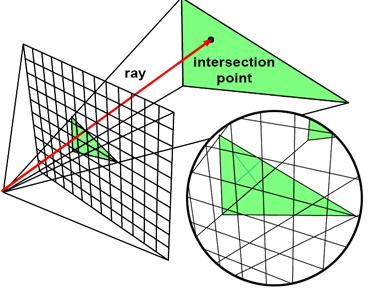

Ray tracing

Imagine you’re standing in a large, mirrored room with a flashlight. When you turn on the flashlight:

- Light Rays: The flashlight beams out light rays, just like the virtual light in a 3D scene.

- Reflection: Some rays hit a mirror and bounce off, showing reflections from various angles.

- Shadows: If you place an object in the beam’s path, it blocks some light, creating a shadow on the walls.

- Transparency and Refraction: If the object is glass, part of the light passes through but bends (refracts), showing distorted views of what’s behind it.

- Color: The walls reflect different hues depending on their paint, just like surfaces in a scene absorb and reflect colors.

Ray tracing works similarly but in reverse: Instead of emitting light from a source, it traces the path of rays backward from the camera to find out what they hit.

It is a method that simulates the lighting of a scene by modelling the physical behaviour of light. It generates computer graphics by tracing the path of light from the viewing camera through the 2D image plane into the 3D scene and back to the source of light. The light may be reflected, blocked or refracted, and all of these are simulated.

- Ray Casting- The process of shooting one or more rays from the camera through each pixel of the image plane. If a ray passing through a pixel into the 3D scene hits a primitive, the distance from the primitive to the camera is determined, and this provides the depth. The ray may also bounce off and collect data from other objects.

- Path Tracing- A more intensive form that traces thousands of rays and follows each one through numerous bounces before it reaches the source of light.

- Denoising Filtering- A technique used to remove noise from rendered images, particularly in ray tracing. By averaging or blending pixel values intelligently, it smooths out unwanted artifacts caused by incomplete sampling. Advanced methods, like AI-based denoisers, enhance image clarity and detail while preserving edges and textures for realistic visuals.
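Ray casting, the simplest of the ideas above, can be sketched by shooting one ray per pixel at a single sphere. This is a toy example under several assumptions: the camera sits at the origin looking down the negative z axis, the image plane is at z = -1, the scene contains only one sphere, and there are no bounces, shadows or denoising. The function names are made up for this example.

```python
import math


def ray_sphere(origin, direction, centre, radius):
    """Ray parameter t of the nearest hit in front of the origin, else None.

    t equals the distance to the hit when direction has unit length.
    """
    oc = tuple(o - c for o, c in zip(origin, centre))
    a = sum(d * d for d in direction)
    b = 2.0 * sum(o * d for o, d in zip(oc, direction))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None                        # the ray misses the sphere
    t = (-b - math.sqrt(disc)) / (2.0 * a)
    return t if t > 0.0 else None          # ignore hits behind the camera


def render(width, height, centre=(0.0, 0.0, -3.0), radius=1.0):
    """Cast one ray per pixel and mark the pixels whose ray hits the sphere."""
    rows = []
    for j in range(height):
        row = ""
        for i in range(width):
            # Map the pixel centre onto an image plane at z = -1.
            x = (i + 0.5) / width * 2.0 - 1.0
            y = 1.0 - (j + 0.5) / height * 2.0
            hit = ray_sphere((0.0, 0.0, 0.0), (x, y, -1.0), centre, radius)
            row += "#" if hit is not None else "."
        rows.append(row)
    return rows


rows = render(16, 16)
print("\n".join(rows))
print("depth straight ahead:", ray_sphere((0.0, 0.0, 0.0), (0.0, 0.0, -1.0),
                                          (0.0, 0.0, -3.0), 1.0))
```

A full ray tracer would continue from each hit point, spawning new rays toward lights and along reflection and refraction directions, which is where most of the cost comes from.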

Graphics hardware

GPU stands for Graphics Processing Unit. It is essentially a circuit that can perform mathematical calculations at high speed. Computing tasks like rendering, running AI models and editing require these operations on large datasets.

An important difference between a GPU and a CPU is that a GPU is designed specifically to handle and accelerate graphics workloads and display graphics content on a device such as a PC, whereas a CPU is a general-purpose processor.

How does it work?

Modern GPUs contain many multiprocessors, each having a shared memory block, plus many processors and corresponding registers. GPUs can be standalone chips, known as discrete GPUs, or integrated with other computing hardware, known as integrated GPUs.

Nvidia graphics cards

NVIDIA RTX graphics cards are state-of-the-art GPUs designed for real time ray tracing and AI graphics. Built on the NVIDIA Ada Lovelace or earlier architectures (e.g., Turing, Ampere), these cards bring cinematic-quality visuals to gaming, professional design, and simulation.

They have AI-powered cores for deep learning and denoising, and an AI upscaling feature, DLSS, that enhances frame rate. They are widely used for gaming and 3D modeling.

Popular models are RTX 4060, 4070, 4080 and 4090 for gaming and A-Series for professional tasks.

Future of Video game graphics

Companies like Nvidia have led groundbreaking advancements in the world of video game graphics, letting gamers experience a range of fascinating and captivating worlds and environments that help them escape from reality into a world which feels just as real but has endless possibilities.

These companies have made graphics cards for high fidelity graphics. We will see ML, AI and natural language processing power futuristic game design. We might be able to walk around a world and have a believable conversation with a character as though it were a human being.

As chips become more advanced, we will be able to process larger datasets. Virtual reality will be driven by newer concepts like ray tracing.

There have been brilliant demonstrations of real time ray tracing which may revolutionize how we think of video game graphics.